Wrapping your head around your data can be difficult sometimes. There can be a lot of it to examine and analyse before you can extract the information you want. However, one thing that is commonly overlooked is the issue of trust. It is very easy to look at our data and take it for granted that it is all correct and accurate. For the most part it will be, but today we are going to look at the areas where it is not. By default, Google Analytics will accept and record all traffic coming to your website. This sounds good, except that some of that traffic might not be entirely legitimate or trustworthy.

What can influence my data?

A small portion of your traffic (or more than you may like to admit) probably comes from less-than-useful sources. These sources go by quite a few different names across the web, and the most common varieties are bots, crawlers and various kinds of spam. You may have heard these terms before without quite knowing what they represent, and you may not even know that you are affected by them at all. They all fall under the same umbrella of being a data nuisance, but let’s look at what makes each one unique.

Bots

Bots (or robots) are automated services that interact with your website in some way. Rather than being directly controlled by a real person, they are started and then repeat a set of tasks on their own. This may be visiting different websites or checking to see whether new content has been added. Among other tasks, they also keep various live services, such as news and blog feeds, up to date.

Web Crawlers

Also known as spiders, web crawlers are very similar to bots: they are automated software that “crawls” through your website, performing a task known as indexing. They are most commonly used by search engines such as Google, Bing or Yahoo. A crawler will typically look at the pages on your website and index the information it discovers, making your website easier to find through a search engine. Depending on your settings, these visits can count as interactions or incoming traffic. Excessive crawling can take place when a crawler lacks instructions, resulting in a large number of interactions with your website.
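Crawler instructions most commonly come from a robots.txt file at the root of a website. The sketch below is an illustration only, assuming a hypothetical robots.txt that blocks a /search/ section and requests a 10 second pause between requests. It uses Python's standard-library urllib.robotparser to show how a well-behaved crawler would read those rules; the bot name ExampleBot and the example.com URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site (an assumption, not a recommendation).
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
Crawl-delay: 10
"""


def load_rules(text: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)

# A polite crawler checks each URL against the rules before requesting it.
for url in ("https://example.com/blog/post", "https://example.com/search/results"):
    print(url, "allowed" if rules.can_fetch("ExampleBot", url) else "blocked")

# Requested pause (in seconds) between requests, or None if none is set.
print("crawl delay:", rules.crawl_delay("ExampleBot"))
```

These rules are requests rather than enforcement: not every crawler honours the Crawl-delay line, and bots with bad intentions can ignore the file entirely.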

Spam

Not to be confused with any kind of canned meat, spam refers to unwanted and useless interactions with your website. It can be carried out by a bot or manually by a real person, which makes it difficult to predict and defend against. In ideal circumstances it would not be a problem, but legitimate tools combined with malicious intent make spam quite an issue for data accuracy.

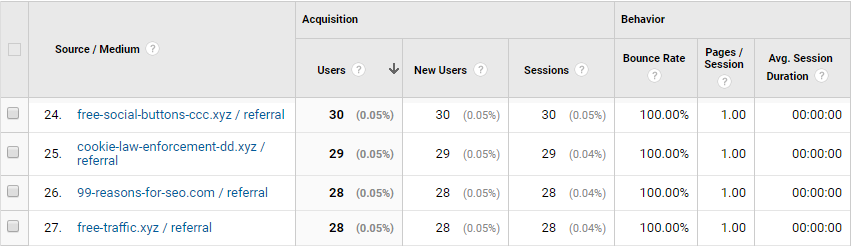

Referral Traffic Spam

One particular type of spam is a problem for a lot of websites. Referral traffic is very valuable, as it essentially provides you with pre-qualified visitors from other relevant websites. This traffic should be very good for completing goals and generating transactions. However, if left unchecked, the majority of this traffic (sometimes even over 80%) can turn out to be useless spam. Because it is usually generated by bots, this spam can greatly distort how your traffic reports look performance-wise. For this reason, it is one of the things we at Aillum specifically recommend looking into.
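If you export your referral sources, a quick calculation shows how much of that traffic comes from domains you already consider spam. This is a minimal sketch in Python, assuming a plain list of referring domains (one entry per session) and a hypothetical blocklist. The domain names and session counts are invented for illustration and are not real spam sources or measured results.

```python
# Hypothetical blocklist of referring domains judged to be spam (illustrative only).
SPAM_REFERRERS = {"free-traffic.example", "seo-offers.example"}


def normalise(domain: str) -> str:
    """Lower-case a referring domain and drop a leading 'www.' so variants match."""
    return domain.strip().lower().removeprefix("www.")


def spam_share(referrers: list[str], blocklist: set[str]) -> float:
    """Return the fraction of referral sessions whose source is on the blocklist."""
    if not referrers:
        return 0.0
    spam = sum(1 for domain in referrers if normalise(domain) in blocklist)
    return spam / len(referrers)


# Invented referral sessions: one entry per session's referring domain.
sessions = (
    ["partner-blog.example"] * 15
    + ["free-traffic.example"] * 60
    + ["www.SEO-offers.example"] * 25
)

share = spam_share(sessions, SPAM_REFERRERS)
print(f"{share:.0%} of referral sessions came from blocklisted domains")
```

The hard part in practice is building the blocklist itself; here it is simply assumed to exist.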

How does this affect your data accuracy?

None of these traffic types are inherently bad or malicious. Bots and crawlers are normally very useful when used properly: they can keep your website organised and properly integrated with other relevant content beyond your own domain. The problem is that not everyone has such good intentions, and this results in bad traffic. Bots, crawlers and spam can all inflate your pageviews, make your bounce rates appear much higher, drive down goal completion rates on your website and more. Because all website visits are recorded in your data by default, this has a further knock-on effect: it skews your perception of how your website is actually performing.
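To see why, consider a simplified model in which every bot or spam session views a single page and never completes a goal. That behaviour is an assumption for illustration, and the figures below are invented rather than taken from a real account. The Python sketch compares the metrics for genuine visitors alone with the metrics once bot sessions are mixed in.

```python
from dataclasses import dataclass


@dataclass
class Traffic:
    sessions: int
    pageviews: int
    bounces: int      # single-page sessions
    conversions: int  # sessions that completed a goal

    def bounce_rate(self) -> float:
        return self.bounces / self.sessions

    def conversion_rate(self) -> float:
        return self.conversions / self.sessions


def add_bot_sessions(real: Traffic, bot_sessions: int) -> Traffic:
    """Mix in bot sessions, assuming each views one page and never converts."""
    return Traffic(
        sessions=real.sessions + bot_sessions,
        pageviews=real.pageviews + bot_sessions,
        bounces=real.bounces + bot_sessions,
        conversions=real.conversions,
    )


# Invented figures for genuine visitors only.
real = Traffic(sessions=1_000, pageviews=2_500, bounces=400, conversions=30)
reported = add_bot_sessions(real, bot_sessions=500)

print(f"pageviews: {real.pageviews} real vs {reported.pageviews} reported")
print(f"bounce rate: {real.bounce_rate():.0%} real vs {reported.bounce_rate():.0%} reported")
print(f"conversion rate: {real.conversion_rate():.1%} real vs {reported.conversion_rate():.1%} reported")
```

With these made-up figures, 500 bot sessions push pageviews from 2,500 to 3,000, lift the bounce rate from 40% to 60% and cut the goal conversion rate from 3.0% to 2.0%, even though the behaviour of real visitors has not changed at all.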

Bots and spam are even more of an issue if you have a small or medium-sized website. They can be indiscriminate when choosing where to attack and may end up accounting for a large percentage of the traffic on a small website, leaving a large portion of your data unreliable.

What actions can be taken?

Bots and spam can be quite intrusive to a website and its data. There are ways to stop them from affecting your data by adjusting settings, applying filters and more. We’ll look at some simple examples of how to do that in more detail in a future blog post, but for now, do check your data thoroughly to see if anything looks suspicious. Even if you have dealt with bots and spam before, staying vigilant is key, as they may have returned in force since you last looked.